Make Reading the Bible a New Year Resolution – It Could Change Your Life

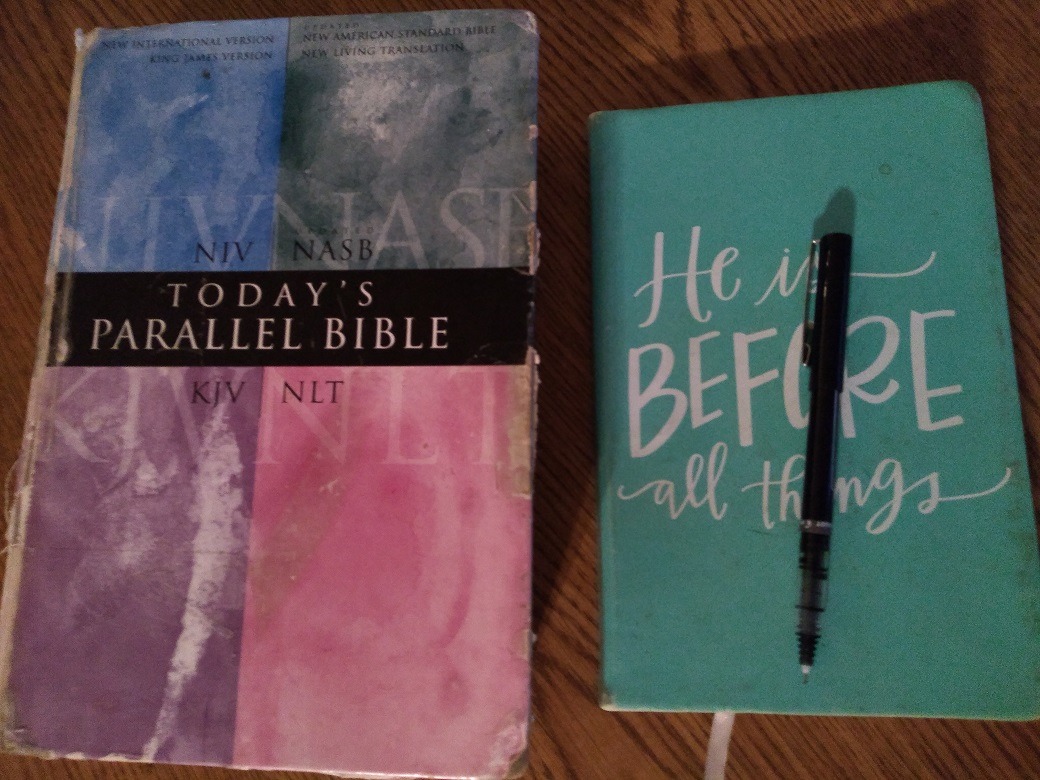

Reading and studying the Bible has literally changed my life. I would not be here today writing what I am writing, on ANY topic on the Health Impact News network, if it were not for my constant studying of the Scriptures.

All of us have been conditioned by our culture, through education, media, entertainment, etc. And that conditioning is controlled at the top primarily by Satan and his Kingdom of Darkness. It is sometimes called "brainwashing," and we are all victims of it.

God's eternal word as recorded in the Scriptures helps us break through the madness and lunacy that result from the propaganda that bombards us every day. It presents truth to expose the lies in our culture. It shines light into the darkness, exposing the evil deeds done in that darkness.

I start out each day with my devotions, where I read a portion of the Bible, write down the key verse or verses God is showing me for that day, and then pray. It is a daily habit I learned early on in my life, and it has brought me to where I am today.

Spiritual discernment and knowledge of the Bible, for example, taught me many years ago that the pharmaceutical industry was Satanic, and that in the Bible the Greek word pharmakeia, from which we get the modern-day word "pharmaceutical," is translated by words such as "witchcraft" and "sorcery." I learned early on that I did not have to fear sickness or disease, and I chose not to participate in the medical system, guided by my knowledge of the Bible and God's Spirit of discernment.

When I look at American culture today, I can't help but think that not many know the Bible (in spite of the fact that there are over 6 billion copies in print!), and not many are being led by God's Spirit, because Satan and his Kingdom of Darkness are winning the day through deception, big time.